Technical uses (e.g. in medical imaging or remote sensing applications) often require more levels, to make full use of the sensor accuracy and to reduce rounding errors in computations. Sixteen bits per sample is often a convenient choice for such uses, as computers manage 16-bit words efficiently. The TIFF and PNG image file formats support 16-bit grayscale natively, although browsers and many imaging programs tend to ignore the low-order 8 bits of each pixel. Internally, for computation and working storage, image processing software typically uses integer or floating-point numbers of 16 or 32 bits. Although grayscale values can in principle be computed as rational numbers, image pixels are usually quantized and stored as unsigned integers to reduce the required storage and computation. Some early grayscale monitors could only display up to sixteen different shades, which would be stored in binary form using 4 bits.
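To see what "ignoring the low-order 8 bits" means in practice, here is a minimal Python sketch (function names are hypothetical) that reduces a 16-bit sample to 8 bits by a right shift, which simply truncates, and compares it with a rounded rescale. The same shift idea reduces an 8-bit value to the 16 levels (4 bits) of the early monitors mentioned above.

```python
def to_8bit_truncate(sample16):
    """Keep only the high-order byte, discarding the low-order 8 bits."""
    return sample16 >> 8

def to_8bit_rounded(sample16):
    """Rescale 0..65535 to 0..255, rounding instead of truncating."""
    return (sample16 * 255 + 32767) // 65535

def to_4bit(sample8):
    """Keep the high-order 4 bits: 16 levels, as on early grayscale monitors."""
    return sample8 >> 4

# Two 16-bit samples that differ only in their low-order byte...
a, b = 0x8000, 0x80FF
# ...become identical once the low byte is dropped.
print(to_8bit_truncate(a), to_8bit_truncate(b))  # 128 128
print(to_8bit_rounded(65535), to_4bit(255))      # 255 15
```

Truncation is cheap and is what "ignoring the low byte" amounts to; rounding spreads the error more evenly but costs a multiply and a divide per sample.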

Today, however, grayscale images intended for visual display are commonly stored with 8 bits per sampled pixel. This pixel depth allows 256 different intensities (i.e., shades of gray) to be recorded, and also simplifies computation, as each pixel sample can be accessed individually as one full byte.

A digital image is nothing more than data: numbers indicating variations of red, green, and blue at particular locations on a grid of pixels. Most of the time, we view these pixels as miniature rectangles packed together on a computer screen. With a little creative thinking and some lower-level manipulation of pixels in code, however, we can display that information in a myriad of ways.

This tutorial is dedicated to breaking out of simple shape drawing in Processing and using images as the building blocks of Processing graphics. A color in Processing is a 32-bit int organized in four components, alpha, red, green, and blue, laid out as AAAAAAAARRRRRRRRGGGGGGGGBBBBBBBB. Each component is 8 bits and can be accessed with alpha(), red(), green(), and blue().
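For a concrete picture of that bit layout, here is a minimal Python sketch (function names hypothetical) that packs four 8-bit components into one integer and pulls them back out with shifts and masks. Processing's own reference suggests bit shifting of this kind as a faster alternative to red() and friends. Python integers are unbounded, so the packed value stays positive here; in Processing (Java) the same bits in a signed int come out negative when alpha is 255, but the `& 0xFF` masks still recover each component.

```python
def pack_argb(a, r, g, b):
    """Pack four 8-bit components as AAAAAAAARRRRRRRRGGGGGGGGBBBBBBBB."""
    return (a << 24) | (r << 16) | (g << 8) | b

def unpack_argb(c):
    """Extract (alpha, red, green, blue) with shifts and an 8-bit mask."""
    return ((c >> 24) & 0xFF, (c >> 16) & 0xFF, (c >> 8) & 0xFF, c & 0xFF)

orange = pack_argb(255, 255, 128, 0)
print(hex(orange))          # 0xffff8000
print(unpack_argb(orange))  # (255, 255, 128, 0)
```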

Eight bits per component works well because 256 shades each of red, green, and blue are about as many as the eye can distinguish. Applications that need more precision, such as the medical imaging and remote sensing uses mentioned earlier, might use a 16-bit integer or even a 32-bit floating-point value for each color component. On the other hand, sometimes fewer bits are used. For example, one common color scheme uses 5 bits for the red and blue components and 6 bits for the green component, for a total of 16 bits per color.

We're accessing the Bitmap object called bmp, which holds the image from the PictureBox.

After a dot comes a built-in method called GetPixel. Between the round brackets you type the coordinates of the pixel whose colour you want to grab. The first time round our outer loop, x will have a value of 0. The first time round our inner loop, y will also have a value of 0. So the first pixel we grab is at 0, 0 in our image, which would be the white square at the top left of our checkerboard image.

The second time round the inner loop, the x and y values will be 0 and 1. Since y is the row, this gets us the pixel directly below the first one in the left-hand column: in a checkerboard of one-pixel squares, the first black square of the second row.

When the three sRGB components of each pixel are equal, the image is in effect a gray image, so it is only necessary to store each value once; the result is called a grayscale image. This is how such an image will normally be stored in sRGB-compatible image formats that support a single-channel grayscale representation, such as JPEG or PNG.
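The walk through the loops and the single-channel storage can both be sketched in a few lines of Python. This assumes a hypothetical 2x2 checkerboard stored row by row as `pixels[y][x]` (not the C# Bitmap from the text): it lists the order in which an outer x loop and an inner y loop visit the pixels, then collapses an image whose R, G and B values are equal into one value per pixel.

```python
# A tiny 2x2 checkerboard stored as pixels[y][x] = (r, g, b); white at top left.
WHITE, BLACK = (255, 255, 255), (0, 0, 0)
pixels = [[WHITE, BLACK],
          [BLACK, WHITE]]

def visit_order(width, height):
    """Coordinates in the order an outer x loop and inner y loop visit them."""
    order = []
    for x in range(width):        # outer loop: columns
        for y in range(height):   # inner loop: rows within the current column
            order.append((x, y))
    return order

def to_single_channel(pixels):
    """Store one value per pixel when R, G and B are all equal (a gray image)."""
    gray = []
    for row in pixels:
        out_row = []
        for r, g, b in row:
            if not (r == g == b):
                raise ValueError("pixel is not gray; channels differ")
            out_row.append(r)
        gray.append(out_row)
    return gray

print(visit_order(2, 2))           # [(0, 0), (0, 1), (1, 0), (1, 1)]
print(pixels[1][0])                # second pixel visited, x=0, y=1: (0, 0, 0)
print(to_single_channel(pixels))   # [[255, 0], [0, 255]]
```

The single-channel version needs a third of the storage, which is exactly why formats with a grayscale mode keep only one value per pixel.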

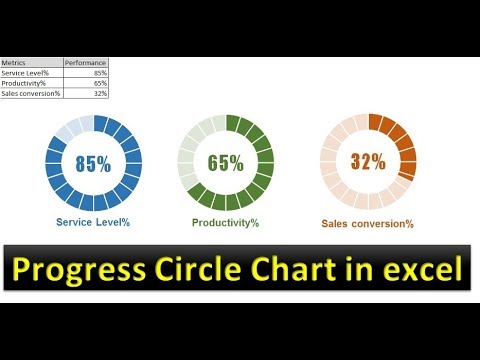

In previous examples, we've seen a one-to-one relationship between source pixels and destination pixels. To increase an image's brightness, we take one pixel from the source image, increase its RGB values, and display one pixel in the output window. To perform more advanced image processing functions, we must move beyond the one-to-one pixel paradigm into pixel group processing.

In the output of a color histogram, the first three columns of each row are the coordinates in color space of the bin center, and the fourth column is the proportion of pixels in that bin.

For example, the first bin, defined by the RGB triplet [0.16, 0.10, 0.03], is a very dark brown color, and 6.73e-01, or about 67%, of the pixels were assigned to this bin. Two bins with the same boundaries (the same bin in two different images) may have very different centers, depending on where the pixels are distributed within that bin. If no pixels are assigned to a bin, its center is defined as the midpoint of its range in each channel.

So in row 7, the RGB triplet is [0.17, 0.83, 0.17] because the bounds were 0–0.33, 0.67–1, and 0–0.33 and there were no bright green pixels in the image. You can visualize this using plotClusters(), or several cluster sets at once using plotClustersMulti().

Color images are often built of several stacked color channels, each of them representing the value levels of the given channel. For example, RGB images are composed of three independent channels for the red, green, and blue primary color components; CMYK images have four channels for cyan, magenta, yellow, and black ink plates; and so on.
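The histogram binning described above can be sketched in plain Python. This is not the R package's implementation; it assumes components scaled to [0, 1] and three bins per channel (27 bins), and the function name and row order (red varying slowest, so row 7 is the red 0–0.33, green 0.67–1, blue 0–0.33 bin) are choices of this sketch. A bin's center is the mean of its pixels, or the midpoint of its ranges when it is empty.

```python
from itertools import product

def color_histogram(pixels, bins=3):
    """Bin RGB pixels (components in [0, 1]) into bins**3 boxes.

    Returns one row per bin: (r_center, g_center, b_center, proportion).
    """
    def index(v):
        # Map a component to its bin; the value 1.0 belongs to the last bin.
        return min(int(v * bins), bins - 1)

    members = {key: [] for key in product(range(bins), repeat=3)}
    for p in pixels:
        members[tuple(index(v) for v in p)].append(p)

    rows = []
    for key, pts in members.items():
        if pts:   # center = mean of the pixels that landed in this bin
            center = tuple(sum(p[c] for p in pts) / len(pts) for c in range(3))
        else:     # empty bin: midpoint of its range in each channel
            center = tuple((k + 0.5) / bins for k in key)
        rows.append((*center, len(pts) / len(pixels)))
    return rows

pixels = [(0.10, 0.05, 0.02), (0.20, 0.15, 0.04), (0.90, 0.90, 0.90)]
rows = color_histogram(pixels)
print([round(v, 2) for v in rows[0]])  # [0.15, 0.1, 0.03, 0.67]
print([round(v, 2) for v in rows[6]])  # [0.17, 0.83, 0.17, 0.0]
```

Row 7 (index 6) reproduces the [0.17, 0.83, 0.17] midpoint from the text because no pixel falls into that bin.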

Grayscale images, a kind of black-and-white or gray monochrome, are composed exclusively of shades of gray. The contrast ranges from black at the weakest intensity to white at the strongest.

Following are two examples of algorithms for drawing Processing shapes. Instead of coloring the shapes randomly or with hard-coded values as we have in the past, we select colors from pixels inside a PImage object. The image itself is never displayed; rather, it serves as a database of information that we can exploit for a multitude of creative pursuits.
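One way to use an image purely as a source of colors is to pick random locations, read the pixel color there, and hand each (x, y, color) triple to whatever shape-drawing code you use. This Python sketch assumes the image is a list of rows of color values and leaves the drawing step out; `sample_dots` is a hypothetical name, not a Processing function.

```python
import random

def sample_dots(image, n, seed=None):
    """Pick n random locations and return (x, y, color) triples.

    The image is only read, never displayed: each dot takes its color
    from the pixel underneath it, as in a pointillist drawing.
    """
    rng = random.Random(seed)
    height, width = len(image), len(image[0])
    dots = []
    for _ in range(n):
        x, y = rng.randrange(width), rng.randrange(height)
        dots.append((x, y, image[y][x]))
    return dots

image = [[(255, 0, 0), (0, 255, 0)],
         [(0, 0, 255), (255, 255, 255)]]
for x, y, color in sample_dots(image, 3, seed=42):
    print(x, y, color)  # e.g. draw an ellipse at (x, y) filled with color
```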

Each color component is represented by an integer between 0 and 255. Each component is assigned a consecutive index within the array, with the top-left pixel's red component at index 0. Pixels then proceed from left to right, then downward, throughout the array.

But what if you're comparing a black-and-white object to a blue-and-yellow one? Or an all-black object to one with 10 distinct colors? Unlike the histogram method, which uses empty bins to register the absence of a color in an image, k-means only returns clusters that are present in an image.
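With the flat array layout described earlier (top-left red at index 0, pixels running left to right and then downward), the index of any component follows from simple arithmetic. A minimal Python sketch, assuming three components per pixel as in the text; an RGBA buffer such as an HTML canvas `ImageData` array would use `channels=4`.

```python
def component_index(x, y, width, channel, channels=3):
    """Index of one color component in a flat, row-major pixel array.

    Pixels run left to right, then top to bottom; within a pixel the
    components are consecutive (channel 0 = red, 1 = green, 2 = blue).
    """
    return (y * width + x) * channels + channel

# A 2x2 RGB image flattened: red, green / blue, white.
data = [255, 0, 0,   0, 255, 0,
        0, 0, 255,   255, 255, 255]
i = component_index(0, 1, width=2, channel=2)
print(i, data[i])  # 8 255  (blue component of the bottom-left pixel)
```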

An RGBA color model with 8 bits per component uses a total of 32 bits to represent a color. This is a convenient number because integer values are often represented using 32-bit values. A 32-bit integer value can be interpreted as a 32-bit RGBA color.
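The 16-bit scheme mentioned earlier, with 5 bits for red and blue and 6 for green, packs the same three components more tightly. Here is a minimal Python sketch of converting to and from that layout, usually called RGB565; the function names are hypothetical, and expanding back to 8 bits by repeating the top bits is one common convention rather than the only one.

```python
def to_rgb565(r, g, b):
    """Pack 8-bit components into 16 bits: 5 red, 6 green, 5 blue."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)

def from_rgb565(c):
    """Expand back to 8 bits per component by replicating the top bits."""
    r5, g6, b5 = (c >> 11) & 0x1F, (c >> 5) & 0x3F, c & 0x1F
    return ((r5 << 3) | (r5 >> 2), (g6 << 2) | (g6 >> 4), (b5 << 3) | (b5 >> 2))

print(hex(to_rgb565(255, 255, 255)))          # 0xffff
print(from_rgb565(to_rgb565(200, 100, 50)))   # (206, 101, 49)
```

The round trip shows the cost of the smaller format: the low-order bits of each component are lost, so (200, 100, 50) comes back only approximately.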

How the color components are arranged within a 32-bit integer is somewhat arbitrary. The most common layout is to store the alpha component in the eight high-order bits, followed by red, green, and blue. (This should probably be called ARGB color.) However, other layouts are also in use.

The provided sample source code builds a Windows Forms application that can be used to test and implement the concepts described in this article. The sample application lets the user load an image file from the file system; the user can then specify the colour to replace, the replacement colour, and the threshold to apply.
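The sample application's C# code isn't reproduced here, so the following is only a Python sketch of the same idea under stated assumptions: pixels are RGB triplets in a flat list, and "within the threshold" means a Euclidean distance in RGB space no larger than the threshold. The function name is hypothetical.

```python
import math

def replace_color(pixels, target, replacement, threshold):
    """Replace every pixel within `threshold` of `target` by `replacement`.

    Distance is Euclidean distance in RGB space (an assumption of this
    sketch); threshold=0 replaces exact matches only.
    """
    return [replacement if math.dist(p, target) <= threshold else p
            for p in pixels]

pixels = [(255, 0, 0), (250, 10, 5), (0, 0, 255)]
print(replace_color(pixels, (255, 0, 0), (0, 255, 0), threshold=20))
# [(0, 255, 0), (0, 255, 0), (0, 0, 255)]
```

A threshold lets near-matches such as (250, 10, 5), which is about 12.2 units away from pure red, be replaced along with the exact color, which matters for photographs and antialiased edges.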

The following image is a screenshot of the sample application in action.

There's something interesting about this image. As in many other visualizations, the colors in each RGB layer mean something. For example, the intensity of the red indicates the altitude of the geographical data point in the pixel.

The intensity of blue indicates a measure of aspect, and the green indicates slope. These colors communicate this information more quickly and effectively than showing numbers would.

The advantage of high-level pixel access is run-time boundary checking, which means you will never get an access violation if you specify the wrong coordinates. There is also no need to learn the physical structure of the pixel to access it. The disadvantage of this approach is its poor performance. If you simply need to change the color of a few points, this method is convenient, but we do not recommend high-level pixel access for intensive pixel processing.
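To make the trade-off concrete, this Python sketch (the class and method names are hypothetical, not those of the library the text describes) wraps a row-major buffer in bounds-checked accessors. It also shows how unchecked index arithmetic with a bad coordinate silently reads the wrong pixel; in a language like C, the same mistake could read outside the buffer entirely.

```python
class CheckedImage:
    """High-level access: every read and write is bounds-checked."""

    def __init__(self, width, height, fill=0):
        self.width, self.height = width, height
        self._data = [fill] * (width * height)  # row-major buffer

    def _check(self, x, y):
        if not (0 <= x < self.width and 0 <= y < self.height):
            raise IndexError(f"pixel ({x}, {y}) outside {self.width}x{self.height}")

    def get_pixel(self, x, y):
        self._check(x, y)
        return self._data[y * self.width + x]

    def set_pixel(self, x, y, value):
        self._check(x, y)
        self._data[y * self.width + x] = value

img = CheckedImage(4, 3)
img.set_pixel(3, 0, 7)
# Raw buffer arithmetic skips the check: x = -1 on row 1 silently
# reads the last pixel of row 0 instead of failing.
print(img._data[1 * img.width + (-1)])  # 7
try:
    img.get_pixel(-1, 1)
except IndexError as err:
    print("rejected:", err)
```

The check costs a comparison on every access, which is the performance penalty the text warns about for intensive processing.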

Using an instance of a PImage object is no different than using a user-defined class. First, a variable of type PImage, named "img", is declared. Second, a new instance of a PImage object is created via the loadImage() method. loadImage() takes one argument, a String indicating a file name, and loads that file into memory. loadImage() looks for image files stored in your Processing sketch's "data" folder.

Hopefully, you are comfortable with the idea of data types. You probably specify them often: a float variable "speed", an int "x", etc. These are all primitive data types, bits sitting in the computer's memory ready for our use.

It's important to remember that, for both getting and setting pixel data, the byte array expects 32-bit alpha, red, green, blue pixel values.
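Since the byte array holds four bytes per pixel in alpha, red, green, blue order, converting between packed pixel values and that byte layout is fixed-width serialization. This Python sketch assumes big-endian byte order (alpha byte first); check the byte order your API actually uses. The function names are hypothetical.

```python
import struct

def pixels_to_bytes(argb_pixels):
    """Serialize 32-bit ARGB values, 4 bytes per pixel, big-endian (A, R, G, B)."""
    return struct.pack(f">{len(argb_pixels)}I", *argb_pixels)

def bytes_to_pixels(buf):
    """Read the buffer back as unsigned 32-bit ARGB values."""
    if len(buf) % 4:
        raise ValueError("buffer length must be a multiple of 4 bytes")
    return list(struct.unpack(f">{len(buf) // 4}I", buf))

buf = pixels_to_bytes([0xFFFF0000, 0x8000FF00])
print(list(buf))  # [255, 255, 0, 0, 128, 0, 255, 0]
```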

Between the round brackets you first specify the location of your new pixel. Our location is held in the x and y variables, which will be 0, 0 the first time round both loops. After a comma, we type the new colour we want for the pixel in this position.

Because k-means starts from randomly chosen cluster centers, repeated runs on the same image can give slightly different results. You can minimize the differences by increasing the values of iter.max and nstart, which are passed to the kmeans() function of the stats package. (As you might guess, this makes the function slower.) Unless the image has extremely high color complexity, however, the differences should be minor, and in my experience they don't affect analyses. Besides the number of clusters, you can also adjust the size of the pixel sample on which the fit is performed.

Because k-means is iterative, repeating its assignment and update steps until the clusters converge, fitting hundreds of thousands of pixels is computationally expensive. getKMeanColors() gets around this by randomly selecting a number of object pixels equal to sample.size, which defaults to 20,000 pixels.

In any case, the most common color model for computer graphics is RGB. RGB colors are most often represented using 8 bits per color component, a total of 24 bits per color.

This representation is sometimes called "24-bit color." An 8-bit number can represent 2^8, or 256, different values, which we can take to be the integers from 0 to 255. A color is then specified as a triple of integers in that range.

Of course, this gives newX and newY as real numbers, and they will have to be rounded or truncated to integer values if we need integer coordinates for pixels. The reverse transformation, going from pixel coordinates to real-number coordinates, is also useful.

I don't know a lot about image processing in C because I didn't bother with the graphics part of it, since none of the programs ran. The firmware will definitely be written in C because that's what we are going to be learning this year, so it would be a sensible choice for the image processing part as well.

The problem with it is that making Windows applications in C is not possible, or at least I haven't heard of it. So I need either C++ or C# with a builder, because I don't want to code the UI as well. Keep in mind that I still need to have control over the lighting units themselves. I've been looking around the internet and I can't find an answer to my question.

I would like to write a program in C# or C++ that collects the displayed data and finds the most commonly displayed colors.

If all three values are at full intensity, that is, 255, the pixel shows as white; if all three are off, with a value of 0, it shows as black. Combinations of the three values give the specific shade of each pixel's color.
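For the poster's goal, here is a Python sketch (function names hypothetical; capturing the screen is left out) that counts exact colors with `collections.Counter`, alongside the channel-wise averaging approach described below. With a mostly red and blue image, the average is a purple that no pixel actually has, which is why "most common colors" and "average color" answer different questions.

```python
from collections import Counter

def most_common_colors(pixels, n=3):
    """The n most frequent exact RGB triplets with their pixel counts."""
    return Counter(pixels).most_common(n)

def average_color(pixels):
    """Channel-wise mean: sum each component, divide by the pixel count."""
    count = len(pixels)
    return tuple(sum(p[c] for p in pixels) / count for c in range(3))

pixels = [(255, 0, 0)] * 3 + [(0, 0, 255)] * 2 + [(255, 255, 255)]
print(most_common_colors(pixels, 2))  # [((255, 0, 0), 3), ((0, 0, 255), 2)]
print(average_color(pixels))          # (170.0, 42.5, 127.5)
```

On real screen captures, antialiasing and gradients create many nearly identical colors, so counting exact triplets may need coarser bins (for example, dropping the low-order bits of each component) to be useful.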

Since each of the red, green, and blue values is an 8-bit number, it ranges from 0 to 255. The typical approach to averaging RGB colors is to add up all the red, green, and blue values and divide each sum by the number of pixels to get the components of the final color.

If you've just begun using Processing, you may have mistakenly thought that the only means offered for drawing to the screen is through a function call.

A line doesn't appear because we say line(); it appears because we color all the pixels along a linear path between two points. Fortunately, we don't have to manage this lower-level pixel setting on a day-to-day basis. We have the developers of Processing to thank for the many drawing functions that take care of this business.

When displaying an image, you might like to alter its appearance. Perhaps you would like the image to appear darker, transparent, blue-ish, etc. This type of simple image filtering is achieved with Processing's tint() function.

tint() is essentially the image equivalent of fill() for shapes, setting the color and alpha transparency for displaying an image on screen. An image, however, is not usually all one color. The arguments for tint() simply specify how much of a given color to use for every pixel of that image, as well as how transparent those pixels should appear.
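As a rough model of what tinting does to each pixel, this Python sketch multiplies every channel by the corresponding tint component divided by 255. That multiplicative model is an assumption of the sketch, not a transcription of Processing's source, and the function names are hypothetical.

```python
def tint_pixel(pixel, tint):
    """Scale each (r, g, b, a) channel of a pixel by the matching tint / 255.

    tint = (255, 255, 255, 255) leaves the pixel unchanged; (255, 255, 255, 128)
    makes it about half transparent; (0, 153, 204, 255) shifts it toward blue.
    """
    return tuple(round(p * t / 255) for p, t in zip(pixel, tint))

def tint_image(pixels, tint):
    """Apply the same tint to every pixel, as one tint() call does for an image."""
    return [tint_pixel(p, tint) for p in pixels]

print(tint_pixel((200, 100, 50, 255), (255, 255, 255, 128)))  # (200, 100, 50, 128)
print(tint_pixel((200, 100, 50, 255), (0, 153, 204, 255)))    # (0, 60, 40, 255)
```

Because every pixel is scaled by the same factors, the image keeps its internal variation while the overall color and transparency shift, which is the behavior the paragraph above describes.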

Alternatively, if you simply want to change the color or transparency of a pixel contained within a bitmap, you can use the setPixel() or setPixel32() method (setPixel32() when the alpha value should change as well). To set a pixel's color, simply pass the x, y coordinates and the color value to one of these methods. The getPixel() method retrieves an RGB value from the x, y coordinates passed to it as parameters.

If any of the pixels that you want to manipulate include transparency information, you need to use the getPixel32() method. This method also retrieves an RGB value, but unlike getPixel(), the value returned by getPixel32() contains additional data that represents the alpha channel value of the selected pixel. When changing the appearance of a bitmap image at the pixel level, you first need to get the color values of the pixels within the area you wish to manipulate. You use the getPixel() method to read these pixel values.

K-means binning usually takes much longer than computing color histograms, which can make a big difference if you're working with a large set of images or with high-resolution images.

If an image has important color variation in a narrow region of color space, k-means may be able to pick up on it more easily than a color histogram would. For instance, if two distinct colors fall within the same histogram bin, k-means will still separate them, since they form natural clusters, provided the appropriate number of clusters is requested. On the other hand, if you only note the colors that are present, it's difficult to compare images that have totally different colors.

With the histogram, we can confidently say that 75% of the pixels in the butterfly were in the "black" range we defined. We're treating all pixels in that bin as if they're the same color, but in this case we can say that the simplification doesn't sacrifice important information.

It's pretty accurate to say that the majority of the butterfly is black; that some of the pixels in the black regions are dark brown or dark grey is not meaningful biological variation for this image. Of course, there are situations where those more subtle color variations do matter, in which case you'd need smaller bins to split those pixels into tighter ranges.

For custom image processing code written in C#, we highly recommend using the methods introduced above, since ImageSharp buffers are discontiguous by default. However, certain interop use cases may require working around this limitation, and we support that; please read the Memory Management section for more information. You can use CopyPixelDataTo to copy the pixel data to a user buffer.

Note that the following sample code leads to significant extra GC allocation for large images, which can be avoided by processing the image row by row instead.

Vector graphics are a somewhat different method of storing images that aims to avoid pixel-related issues. But even vector images are, in the end, displayed as a mosaic of pixels.
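Whether it comes from line() or from a vector file, a line has to be turned into a set of pixels at some point. Bresenham's line algorithm is one classic way to do that using only integer arithmetic. This Python sketch is illustrative and is not a claim about how Processing or any particular renderer rasterizes lines.

```python
def bresenham(x0, y0, x1, y1):
    """Pixels on the line from (x0, y0) to (x1, y1), using Bresenham's
    integer-only algorithm (works in all octants)."""
    points = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy  # running error term, updated with integers only
    while True:
        points.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:   # error says: step in x
            err += dy
            x0 += sx
        if e2 <= dx:   # error says: step in y
            err += dx
            y0 += sy
    return points

print(bresenham(0, 0, 5, 2))
# [(0, 0), (1, 0), (2, 1), (3, 1), (4, 2), (5, 2)]
```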
